Introduction

Hello builders, and welcome to the AO Hackathon! At APUS, our mission is to enable verifiable, decentralized AI for the AO ecosystem by integrating deterministic GPU computing. We believe that direct, on-chain access to accelerated computing unlocks the next generation of autonomous agents, making them more intelligent and capable than ever before.

For this hackathon, we are thrilled to introduce our flagship product: the APUS AI Inference Service. This service gives your AO processes the ability to call a large language model and receive a response, all through a simple on-chain message. Inspired by the simplicity and elegance of community projects like llama-herder (https://github.com/permaweb/llama-herder), our service is designed to be easy to use, so you can focus on building amazing things.

This guide will walk you through everything from making your first AI call to integrating our service into a full-stack dApp. Let’s get started!

Quick Start In 5 Minutes

Install aos

Spawn your process & trust APUS HyperBEAM Node

- Open your terminal

- Spawn your process:

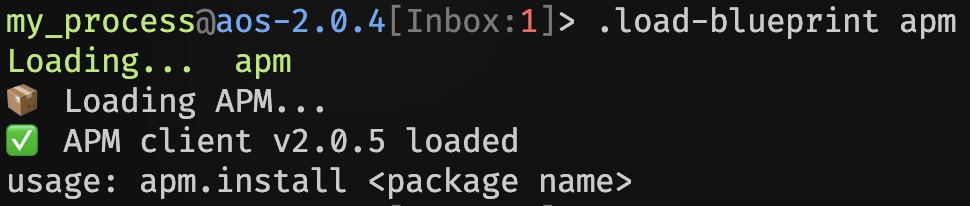

Install APM & APUS Lua Lib

- Install APM:

- Wait for APM Loaded (about 2 minutes)

Note: Please wait for the `APM client loaded` log message to appear. If it does not appear after a long time, try entering any command in the aos console to check whether the system is stuck.

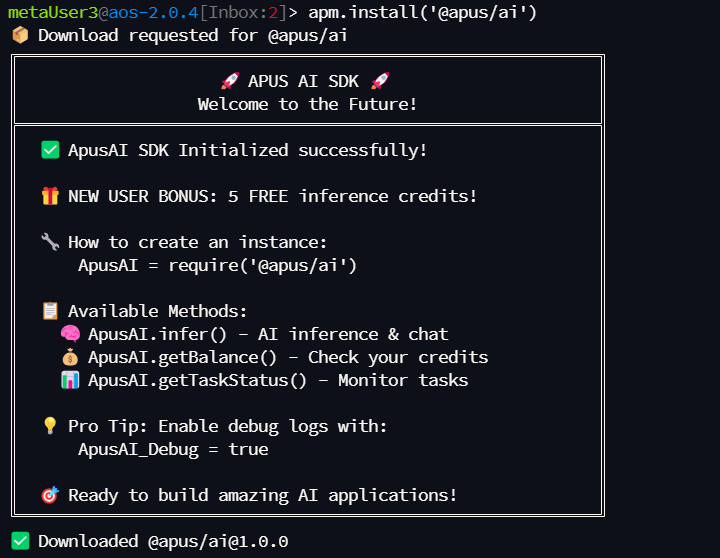

- Install Lua Lib:

- Wait for Lua Lib Installed

Note: Download may take a while. Please wait.


Get your credits

- You have 5 default test credits, allowing up to 5 inference calls.

- Apply for more credits (recommended):

- Join the APUS Discord: https://discord.gg/r3aQcJRH5A

- Go to the `#📥|apus-hackathon-agent` channel.

- Submit your application:

🧪 Sample Application Format

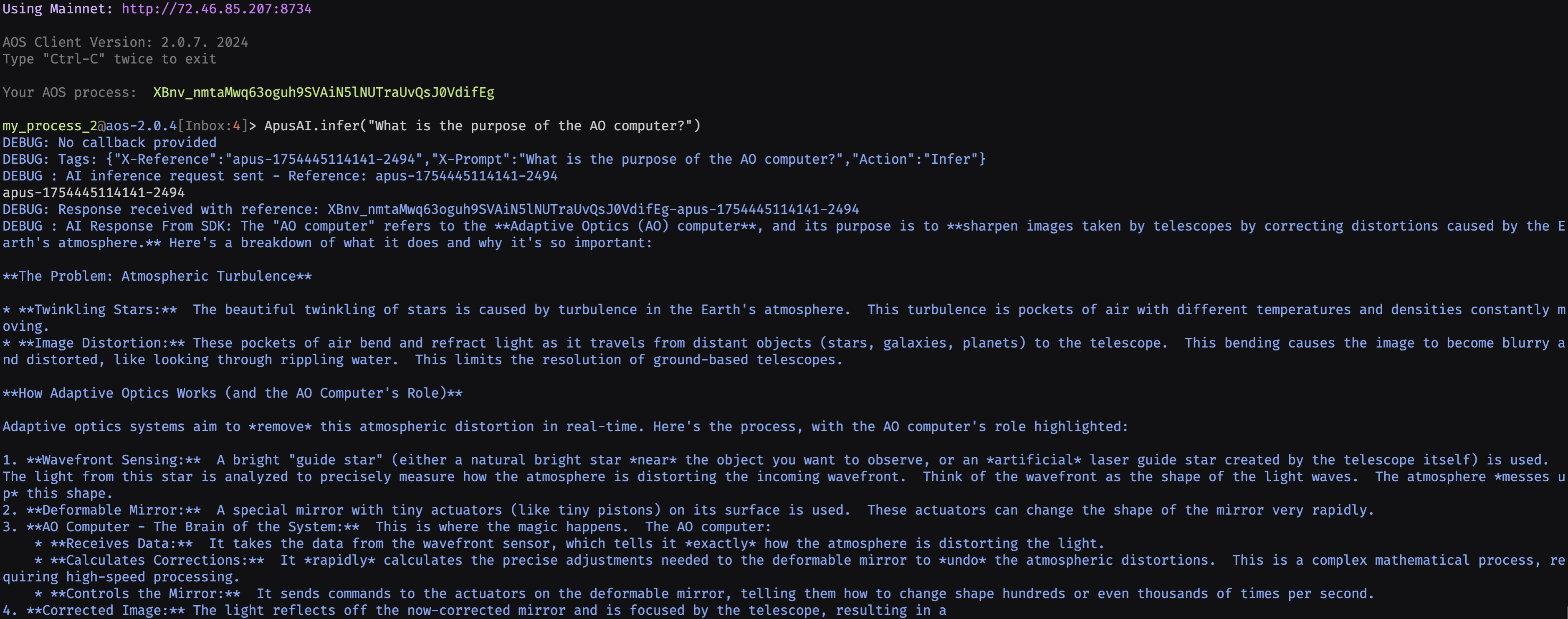

Run AI Inference

- Load AI Lib

- Set `ApusAI_Debug` to enable inference result logging. If unset, no logs will be shown.

Default balance: 5,000,000,000,000 units (equals 5 credits for 5 inference calls). Credits are non-transferable.

- Run AI Inference (takes about 50 seconds)

- Wait for AI Response
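To make the credit arithmetic from the default balance concrete, here is a minimal Python sketch for budgeting inference calls off-chain. It is not part of the APUS library. The constant `UNITS_PER_CREDIT` and the helpers `units_to_credits` and `remaining_calls` are hypothetical names, and the conversion rate is only inferred from the statement that 5,000,000,000,000 units equal 5 credits for 5 inference calls.

```python
# Assumption: 1 credit = 1,000,000,000,000 units, inferred from the default
# balance of 5,000,000,000,000 units being described as 5 credits.
UNITS_PER_CREDIT = 1_000_000_000_000

# Assumption: each inference call costs exactly one credit.
CREDITS_PER_CALL = 1


def units_to_credits(units: int) -> int:
    """Return the number of whole credits contained in a raw unit balance."""
    return units // UNITS_PER_CREDIT


def remaining_calls(units: int) -> int:
    """Return how many inference calls a raw unit balance can pay for."""
    return units_to_credits(units) // CREDITS_PER_CALL


default_balance = 5_000_000_000_000
print(units_to_credits(default_balance))  # 5
print(remaining_calls(default_balance))   # 5
```

If the actual pricing differs (for example, if cost varies per request), only the two constants at the top would need to change.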

🚀 Next Step: View Complete Project Example

Congratulations! You have successfully run your first AI inference call. Now you can view our complete project example to learn how to build real AI applications:

📖 View Complete Project Example →

This example project will demonstrate:

- How to deploy AI chat agents on the AO network

- How to integrate APUS AI inference services

- How to build frontend interfaces to interact with AO agents

- Complete developer guides and best practices